Every week brings a new headline about artificial intelligence hurtling us toward a sci-fi future—self-improving systems, AI CEOs, the singularity knocking politely at the door. But what if the real story is a little more… normal?

In a recent manifesto titled “AI as a Normal Technology,” Princeton computer scientists Arvind Narayanan and Sayash Kapoor propose a refreshingly grounded take: AI isn’t an alien force destined to upend society overnight. It’s a tool. A powerful one, yes, but one that will spread and be integrated slowly, awkwardly, and with all the bureaucratic resistance and human quirks that come with any other technology.

Think electricity. The internet. Even the PC. All of them transformed society, eventually. But not in a single dramatic act. They needed time to spread and to be understood, regulated, and adapted to the messy realities of the world. Narayanan and Kapoor argue that AI is no different.

Diffusion, not detonation

At the heart of their argument is a key distinction: AI’s capabilities are growing rapidly, but its power—what it actually changes in the real world—is constrained. Not because it lacks potential, but because institutions, industries, and humans don’t transform overnight. Processes are slow. Regulations matter. Incentives aren’t always aligned.

Just because an AI model can write code or summarize a 300-page report doesn’t mean companies will fire teams en masse and hand over the keys. For now, and for quite a while, AI will need supervision, guidance, and a lot of clean-up work.

The workplace isn’t ready for an AI takeover

One of the paper’s most compelling points is that AI adoption is bottlenecked not by the tech itself, but by people and systems. History tells us that even when a technology is clearly useful, the road from invention to transformation is littered with logistical potholes.

And it’s not just about logistics. There are cultural, ethical, and practical hurdles. Organizations still wrestle with questions like: Who’s accountable when an AI makes a mistake? Can you trust a model you didn’t build? Should AI tools get access to sensitive customer data? These are not trivial concerns—and they’re slowing things down in all the right ways.

The real risks aren’t robot uprisings

Narayanan and Kapoor also point out that while sci-fi scenarios about rogue AIs make for thrilling headlines, the risks we face today are more subtle and, in many ways, more dangerous: bias in decision-making, privacy erosion, and the consolidation of power among a few tech giants.

They warn that focusing too heavily on future superintelligences could actually distract from solving the tangible problems that AI is already creating. And worse, they suggest that some of the “solutions” proposed to guard against hypothetical super-AIs—like global authoritarian oversight—might end up being more harmful than the problem itself.

The future is collaborative, not catastrophic

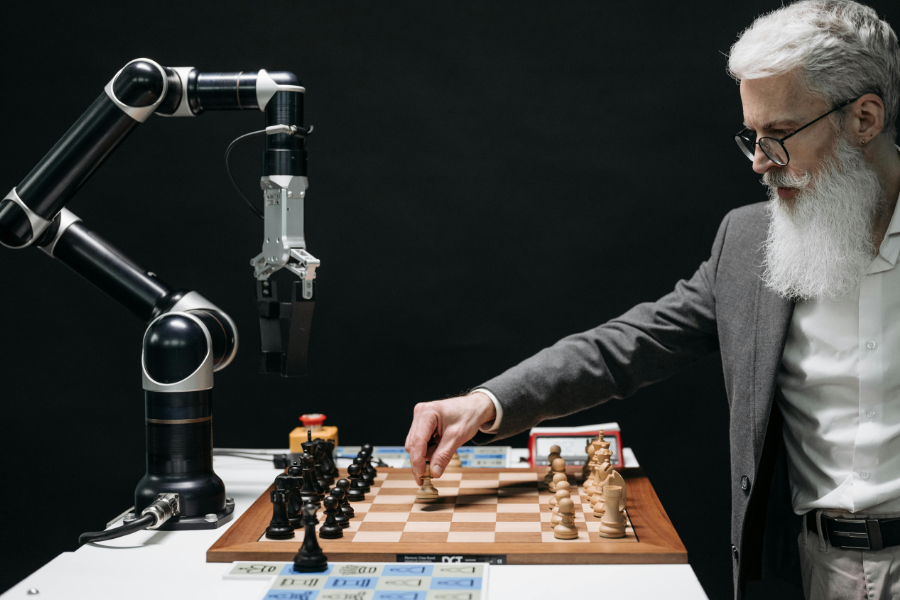

This doesn’t mean AI won’t have a profound impact. It already does. But that impact will be mediated by humans: our choices, our governance, our values. Rather than obsessing over when AI will take over, the better question is: how can we design systems where humans stay in the loop? Where control and accountability don’t vanish into the cloud?

The answer isn’t more hype. It’s more intention. More nuance. More honest conversations about how we integrate AI into the world we actually live in, not the one imagined by science fiction.

And if that sounds a little… boring? That might be a good thing. Because sometimes, the smartest path forward isn’t a revolution. It’s a slow, careful evolution.

Reference:

Arvind Narayanan and Sayash Kapoor (Princeton University), “AI as a Normal Technology.”